Decoding the Digital World: Unveiling the Difference Between Bit and Byte

In the intricate world of computers and digital technology, understanding the fundamental units of information is crucial. At the very core of everything digital lies the bit and the byte – two terms that often cause confusion. While seemingly simple, the difference between bit and byte is profound, impacting everything from how your files are stored to how quickly you can download a movie. This article delves into these essential concepts, providing a clear and comprehensive explanation of their roles, significance, and the critical difference between bit and byte.

The Bit: The Atomic Unit of Information

Think of a bit as the smallest unit of information in a computer. It’s the digital equivalent of an atom, the building block of all digital data. The word “bit” is a portmanteau of “binary digit.” A bit can represent one of two states: 0 or 1. This binary system, based on the presence or absence of an electrical signal, is the language computers understand. It’s a simple on/off switch. These 0s and 1s are combined to represent more complex data, such as numbers, letters, and images. The difference between bit and byte becomes clearer when you consider their individual capacities.

These binary digits are the foundation upon which all digital processes are built. Everything from the text you are reading now to the complex algorithms that power artificial intelligence is ultimately represented by combinations of bits. The efficiency and speed of a computer system often depend on how quickly it can manipulate and process these individual bits.

How Bits Work in Practice

Imagine a light switch. The switch can be either on (represented by 1) or off (represented by 0). That’s the basic principle behind a bit. In a computer, this “switch” is implemented using electronic components, such as transistors, that can be in one of two states. These states can represent different things, such as the presence or absence of an electrical charge or the direction of a magnetic field.

While a single bit is limited in what it can represent, combining multiple bits allows more complex information to be encoded: each added bit doubles the number of possible patterns, so n bits can represent 2^n distinct values. This is where the concept of a byte comes into play, and it is key to understanding how data is structured.
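As a rough illustration of how combining bits increases what can be represented, the following Python sketch lists every pattern for a given number of bits. The function names `count_values` and `bit_patterns` are made up for this example and do not come from any library.

```python
def count_values(num_bits: int) -> int:
    """Return how many distinct patterns num_bits bits can encode (2 ** n)."""
    return 2 ** num_bits


def bit_patterns(num_bits: int) -> list[str]:
    """List every pattern of the given width as a string of 0s and 1s."""
    # format(i, "03b") renders i in binary, zero-padded to 3 digits.
    return [format(i, f"0{num_bits}b") for i in range(2 ** num_bits)]


if __name__ == "__main__":
    print(count_values(1))   # a single bit: 2 states
    print(bit_patterns(2))   # two bits: 00, 01, 10, 11
    print(count_values(8))   # eight bits: 256 patterns
```

Each extra bit doubles the count, which is why a group of eight bits already covers 256 values.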

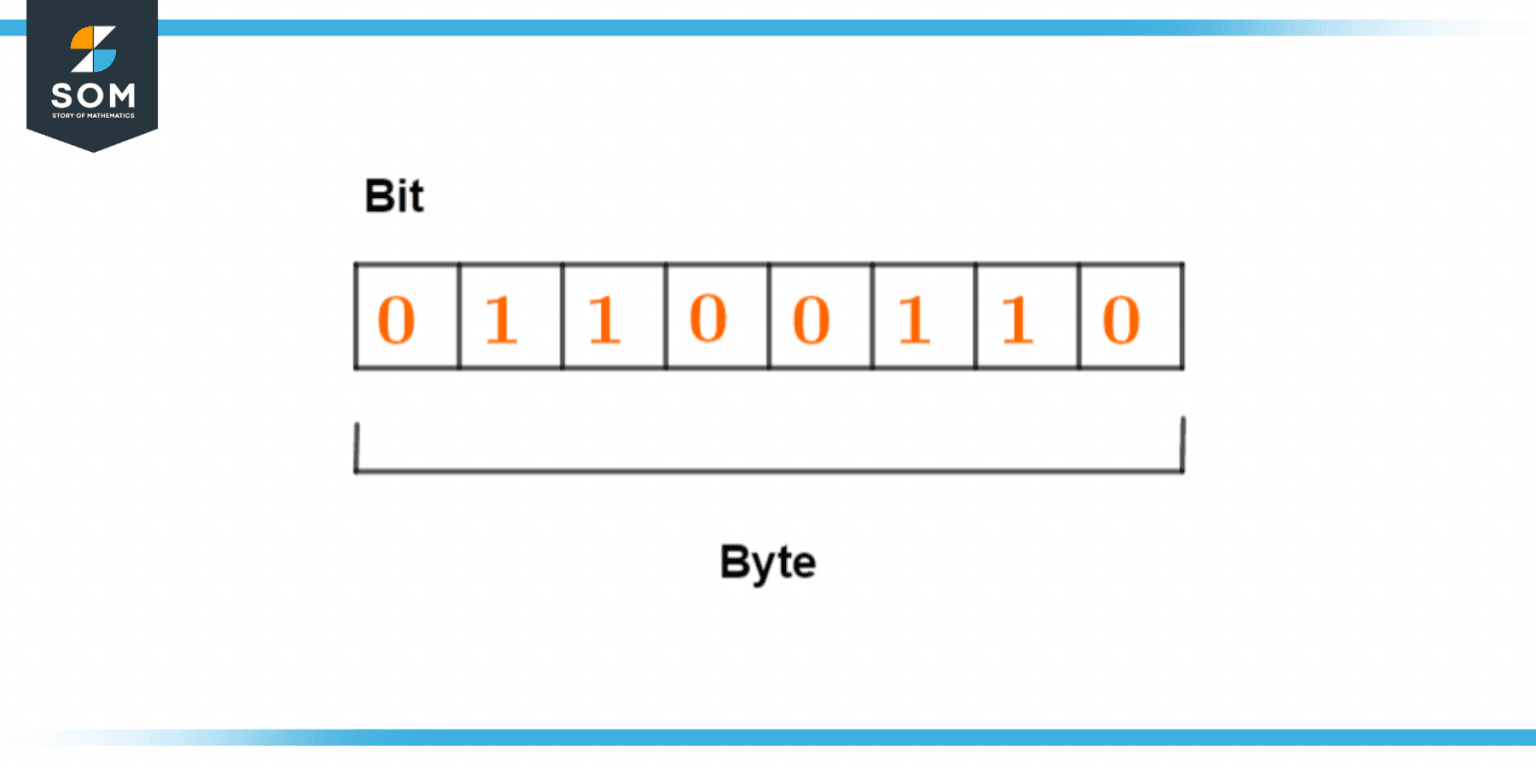

The Byte: A Collection of Bits

A byte is a group of bits, typically eight. It’s the standard unit used to measure data storage size. Think of a byte as a word, while a bit is a letter. A single byte can represent 256 different values (2 to the power of 8). This allows a byte to represent a single character, a small number, or part of a larger piece of data. The difference between bit and byte becomes more apparent when we consider that a byte is, in essence, a collection of bits working together.
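To make the idea of one byte holding one character concrete, here is a small Python sketch that shows the eight bits behind each character of a short text. It assumes plain ASCII text, where every character fits in a single byte; other encodings can use several bytes per character. The helper names `text_to_bits` and `bits_to_text` are illustrative only.

```python
def text_to_bits(text: str) -> str:
    """Encode ASCII text and show each byte as 8 bits, separated by spaces."""
    data = text.encode("ascii")  # assumption: input is plain ASCII
    return " ".join(format(byte, "08b") for byte in data)


def bits_to_text(bits: str) -> str:
    """Reverse text_to_bits: turn space-separated 8-bit groups back into text."""
    data = bytes(int(group, 2) for group in bits.split())
    return data.decode("ascii")


if __name__ == "__main__":
    encoded = text_to_bits("Hi")
    print(encoded)                # 01001000 01101001
    print(bits_to_text(encoded))  # Hi
```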

The use of bytes simplifies how computers handle and store data. Instead of dealing with individual bits, which would be cumbersome, computers can work with bytes as a unit. This makes data processing and storage much more efficient. The difference between bit and byte lies in their scale and application.

The Significance of Eight Bits

The decision to use eight bits in a byte wasn’t arbitrary. It’s a practical choice that balances efficiency and representation. Eight bits provide enough combinations to represent all the characters on a standard keyboard (letters, numbers, symbols) and a range of other data. This standard has become deeply ingrained in computer architecture. The difference between bit and byte is not just a matter of quantity; it’s about the practical organization of digital information.

While other byte sizes have been used in the past, the eight-bit byte has become the dominant standard due to its versatility and ease of use. It’s why you see storage sizes measured in kilobytes, megabytes, gigabytes, and terabytes, all derived from the byte.

Illustrating the Difference: Bit vs. Byte

To further clarify the difference between bit and byte, consider the following analogy: Imagine you’re building a LEGO structure. Each individual LEGO brick is like a bit. It can either be present or absent, connected or not connected. A group of eight LEGO bricks, connected together, forms a small structure, like a small block or a small part of a larger model. This small structure is like a byte. A single LEGO brick (bit) is limited, but a group of eight (byte) allows for more complex representations.

Here’s a table summarizing the key differences:

| Feature | Bit | Byte |
|---|---|---|
| Definition | Smallest unit of data | Group of 8 bits |
| Representation | 0 or 1 | 256 possible values |
| Use | Basic unit of digital information | Used to measure data storage and transfer |

This table highlights the fundamental difference between bit and byte in a clear and concise manner.

Practical Implications: Data Storage and Transfer

The difference between bit and byte has significant implications for data storage and transfer. When you purchase a hard drive, you’re buying a certain number of bytes of storage. When you download a file, the download speed is often measured in bits per second (bps) or bytes per second (Bps). Understanding the difference helps interpret these metrics correctly.

Storage Capacity

Storage capacity is typically measured in bytes. In the binary convention, a kilobyte (KB) is 1,024 bytes (2^10 bytes), a megabyte (MB) is 1,024 KB, a gigabyte (GB) is 1,024 MB, and a terabyte (TB) is 1,024 GB. Drive manufacturers generally use decimal units instead (1 KB = 1,000 bytes), which is why a drive can show slightly less capacity in the operating system than its label suggests. The larger the storage capacity, the more bytes (and therefore bits) the device can hold. This directly relates to the amount of data you can store, such as documents, photos, and videos. Knowing the difference between bit and byte is crucial for making informed decisions about storage needs.
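The unit ladder described above can be sketched in a few lines of Python. This example assumes the binary convention (each step is a factor of 1,024); the names `UNITS`, `human_readable`, and `bytes_to_bits` are invented for illustration.

```python
UNITS = ["bytes", "KB", "MB", "GB", "TB"]


def human_readable(num_bytes: int) -> str:
    """Express a byte count in the largest unit that keeps the value below 1,024."""
    size = float(num_bytes)
    for unit in UNITS:
        # Stop when the value fits in this unit, or when no larger unit is left.
        if size < 1024 or unit == UNITS[-1]:
            return f"{size:.2f} {unit}"
        size /= 1024


def bytes_to_bits(num_bytes: int) -> int:
    """A byte is 8 bits, so multiply by 8."""
    return num_bytes * 8


if __name__ == "__main__":
    print(human_readable(1536))         # 1.50 KB
    print(human_readable(1024 ** 3))    # 1.00 GB
    print(bytes_to_bits(1024))          # 8192
```

Under the decimal convention used on drive labels, the same sketch would divide by 1,000 at each step.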

Data Transfer Rates

Data transfer rates are often expressed in bits per second (bps) or megabits per second (Mbps). A download speed of 10 Mbps means data is being transferred at a rate of 10 million bits per second. To convert this to bytes per second, divide by 8, since there are 8 bits in a byte: a 10 Mbps connection delivers 1.25 MBps (megabytes per second). Keeping bits and bytes apart is essential for understanding the actual speed of your internet connection or the speed at which data moves between devices.
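The divide-by-8 conversion is simple enough to check in code. This is a minimal Python sketch; the function name `mbps_to_megabytes_per_second` is an assumption made for this example.

```python
BITS_PER_BYTE = 8


def mbps_to_megabytes_per_second(mbps: float) -> float:
    """Convert megabits per second to megabytes per second by dividing by 8."""
    return mbps / BITS_PER_BYTE


if __name__ == "__main__":
    print(mbps_to_megabytes_per_second(10))    # 1.25
    print(mbps_to_megabytes_per_second(100))   # 12.5
```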

Beyond the Basics: Kilobits, Megabits, and More

While the core difference between bit and byte is fundamental, understanding related units is also important. As mentioned, storage is typically measured in bytes, while data transfer speeds are often expressed in bits. This can sometimes lead to confusion, especially when comparing storage capacity with download speeds.

Kilobits vs. Kilobytes

A kilobit (Kb) is 1,000 bits, and a kilobyte (KB) is 1,024 bytes. The distinction is subtle but important. When you see an internet speed advertised as 100 Mbps, this is megabits per second. To determine how quickly you can download a file, you need to convert this to megabytes per second (MBps) by dividing by 8. The difference between bit and byte plays a key role in this calculation.
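To see how the two conventions interact, the sketch below estimates how long a download takes. It assumes the definitions used in this article (a megabyte is 1,024 x 1,024 bytes, a megabit is 1,000,000 bits) and ignores protocol overhead and network congestion, so real downloads will be slower. The function name `download_seconds` is hypothetical.

```python
BITS_PER_BYTE = 8
BYTES_PER_MB = 1024 * 1024      # binary megabyte, as used for file sizes here
BITS_PER_MEGABIT = 1_000_000    # decimal megabit, as used for line speeds


def download_seconds(file_size_mb: float, speed_mbps: float) -> float:
    """Estimate the ideal download time in seconds for a file of the given size."""
    file_bits = file_size_mb * BYTES_PER_MB * BITS_PER_BYTE
    bits_per_second = speed_mbps * BITS_PER_MEGABIT
    return file_bits / bits_per_second


if __name__ == "__main__":
    # A 700 MB file on a 100 Mbps connection takes roughly 58.7 seconds.
    print(round(download_seconds(700, 100), 1))
```

The result is far longer than the 7 seconds you would get by mistakenly treating 100 Mbps as 100 megabytes per second.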

Megabits vs. Megabytes

Similarly, a megabit (Mb) is 1,000,000 bits, and a megabyte (MB) is 1,048,576 bytes. This is the core of the difference between bit and byte when dealing with larger data units. Data transfer rates are commonly expressed in megabits per second (Mbps). Storage capacity of files and devices is typically measured in megabytes (MB) or gigabytes (GB).

Gigabits and Terabits

The same principles apply to gigabits (Gb) and terabits (Tb). These units represent larger quantities of bits and are used to measure high-speed data transfer rates, such as those used in fiber optic networks. Understanding the difference between bit and byte helps you to accurately compare these different units and understand the scale of data being transferred.

The Evolution of Bits and Bytes

The concepts of bits and bytes have remained remarkably consistent throughout the history of computing. While the underlying hardware and technologies have evolved dramatically, the fundamental principles of binary representation and data organization have remained central to the digital world. The bit has always been a single binary digit; what has changed is the scale at which bits and bytes are used, which has expanded with increasing processing power and storage capacity.

Early computers used different byte sizes, but the eight-bit byte eventually became the standard. This standardization facilitated the development of software and hardware that could work together seamlessly. Today, the eight-bit byte is a cornerstone of modern computing.

Conclusion: Mastering the Basics

Understanding the difference between bit and byte is essential for anyone navigating the digital landscape. These fundamental units underpin all aspects of data storage, data transfer, and computer operations. From understanding your internet speed to assessing your storage needs, a clear grasp of these concepts will empower you to make informed decisions. By recognizing that a bit is the smallest unit, and a byte is a collection of bits, you’ll be well-equipped to understand and navigate the digital world.

Whether you’re a student, a professional, or simply a curious individual, taking the time to understand the difference between bit and byte is an investment in your digital literacy. It’s a foundational concept that will serve you well as technology continues to evolve. The difference between bit and byte is a gateway to understanding more complex computing concepts.

This knowledge applies across many fields, from computer science to telecommunications, wherever data is structured, stored, or transferred.
